Davos Elites Warn That Disinformation Is an Existential Threat to Their Influence

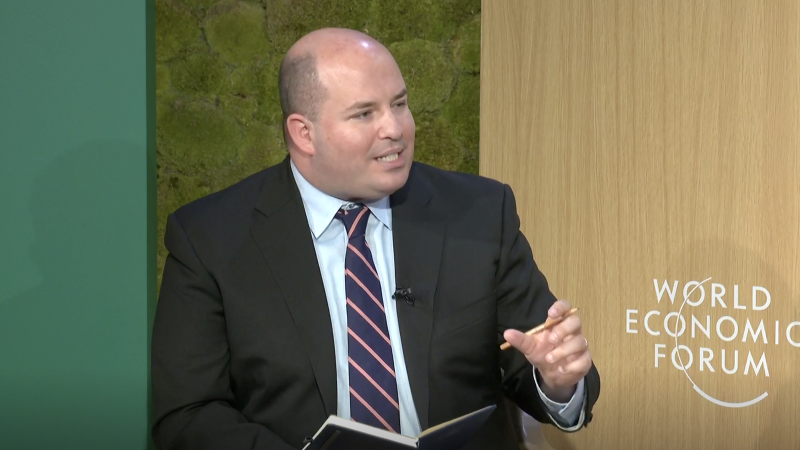

At the World Economic Forum, Brian Stelter and panelists discuss why everything is Facebook's fault.

The World Economic Forum, an annual meeting of international business leaders, political figures, and other elites, is well underway in Davos, Switzerland. One of Monday's panels, "The Clear and Present Danger of Disinformation," was moderated by former CNN host Brian Stelter.

Stelter and his panelists did elucidate several pressing dangers posed by rampant disinformation on social media. Quite inadvertently, they also highlighted the inherent drawbacks of adopting a permanent war-footing approach to stopping disinformation. Indeed, several of the panelists spread inaccurate information in the course of their remarks.

These panelists were Vera Jourová, a member of the European Union's executive cabinet, the European Commission; Jeanne Bourgault, who helms a nonprofit group that supports independent media; Rep. Seth Moulton (D–Mass.); and A.G. Sulzberger, publisher of The New York Times and nepo baby.

Stelter kicked off the discussion by framing "disinformation" as the central conundrum of our times—all other problems being downstream of this issue. Sulzberger wholeheartedly embraced this view.

"I think it maps, basically, to every other major challenge that we are grappling with as a society, and particularly the most existential among them," he said, lumping in disinformation—false information, intended to mislead people—with "conspiracy, propaganda, and clickbait." Disinformation is why society seems so fractured, why trust in elite institutions is declining, and why democracy itself appears to be retreating, they implied.

In other words, it's all Facebook's fault.

Social media has become a popular scapegoat and common enemy for elite media figures and Democratic politicians (and for Republican politicians as well, albeit for opposite reasons). The former seized on Russian malfeasance as their preferred explanation for how Donald Trump was able to win the presidency in 2016. This explanation, that bad actors, probably Russian, are confusing voters on Facebook, YouTube, Twitter, and other online platforms, is now frequently deployed to explain all sorts of troubling developments, even though studies keep disproving it.

Moulton, for his part, at first used his speaking time to equivocate on whether the government should do more to combat online disinformation, correctly noting that Americans do not like to be censored, even if societal elites think it would be for their own good. But when the subject turned to COVID-19, he confessed that his resolve wavered.

"When I have a constituency that I'm trying to keep healthy, and I can't get them to take a COVID vaccine because of misinformation that's propagated on the internet, that's where this becomes a much tougher, more difficult, bigger concern," he said.

It's no doubt true that misinformation about vaccines has spread online, causing harm. But the COVID-19 pandemic has shown precisely why no central authority can be trusted with the power to restrict allegedly harmful content. Government health bureaucrats, social media content moderators, scientists in good standing with the liberal consensus, and media organizations have all circulated false information about COVID-19.

Sometimes, these were agenda-driven guesses that ended up being wrong, like when The Atlantic described the end of lockdowns as "an experiment in human sacrifice." Sometimes, these were lies in service of some purported greater good, as when top pandemic health bureaucrat Anthony Fauci downplayed the importance of masking and understated the herd immunity threshold. Sometimes, these were baseless claims from slow-moving government agencies, like when the Centers for Disease Control and Prevention (CDC) recommended the strongest masking guidelines for the least at-risk group.

Other times, institutions simply defaulted to knee-jerk censorship until they embarrassingly reversed course, as Facebook did when it finally permitted discussion of the lab leak theory.

None of this means that the pervasiveness and negative impact of false information about COVID-19 vaccines should be written off; assertions that a spate of recent deaths can be attributed to vaccine-induced heart problems are flatly incorrect, pernicious, and worth correcting. But the very experts who would claim for themselves the power to monitor social media and police wrongthink have an astonishingly bad track record of distinguishing falsehood from truth. Facebook's third-party fact-checking partners, for example, routinely flag true statements for moderation.

Jourová noted that the governing body she works for has criminalized hate speech, something that can't happen in the U.S., where the First Amendment prevents such actions. But she also pointed out that most of what is categorized as disinformation is legal speech, and that there is clearly a balance to be struck. Bourgault brought up Facebook's Myanmar debacle, in which social media posts contributed to a state-sponsored genocide against the country's Rohingya Muslim minority: a genuinely appalling case of insufficient moderation creating real-world violence.

But the most alarmist speaker was Sulzberger, who doubted that disinformation could be grappled with unless social media platforms engage in more restriction of disfavored content.

"At some point, given the central role of the platforms in disseminating bad information, I think they're going to have to do an unpopular and brave thing, which is to differentiate and elevate trustworthy sources of information consistently," he said. "Until they do, we have to assume that those environments are poisoned."

It's The New York Times' view—a view quite popular at Davos—that social media is very bad and will continue to be very bad until it awards preferential treatment to The New York Times.
